Savant gives developers a highly efficient inference based on TensorRT, which you usually must use when developing efficient pipelines. However, because of the particular need, you may need to integrate the Savant pipeline with another inference technology. In the article, we show how Savant integrates with GPU-accelerated PyTorch inference.

You can also use the approach if you are PyTorch-centric and happy with it but need efficient infrastructure for video processing: transfer, decoding, and encoding.

First, you must appreciate that for a model, even CUDA-accelerated PyTorch inference is almost twofold slower than TensorRT. Thus, using PyTorch is no potential benefit if you are not under certain constraints.

Let us first consider the reasons for such integration. We see three reasons for using PyTorch:

- Prototyping and reuse of the existing code without significant modifications;

- Model incompatibility with TensorRT;

- Determinism requirement.

How does PyTorch Inference Win When served with Savant?

In Savant, we provide API to work with video frames allocated in GPU memory without downloading them to the CPU. They are represented with OpenCV GpuMat objects, so you can modify images, crop, transform, and run other CUDA-accelerated operations efficiently.

Frame Modification Limitations

The whole frame is available as GpuMat. When working with it, you must avoid changing the geometry and data layout because the system does not expect it. If you need to preprocess the image before passing it to PyTorch, you must duplicate or crop the required area and do anything you need with the copy object.

Savant provides developers with various approaches to deal with graphics modification. For short-term modification, you can rely on the above-discussed approach or use image preprocessing when TensorRT inference is used. Long-term modifications enduring throughout the pipeline with auxiliary frame padding.

With existing tools, you can transform GpuMat data between GpuMat, CuPy, or PyTorch tensors without downloading to the CPU. Thus, when you want to process the data with PyTorch in Savant, you must implement the following steps:

- Crop required areas of the frame (OpenCV CUDA);

- Implement required preprocessing (OpenCV CUDA, TorchVision, or CuPy);

- Pass the batch the PyTorch CUDA-accelerated inference;

- Retrieve a PyTorch tensors representing the inferred data;

- Implement required preprocessing with (TorchVision, or CuPy);

- Download it to the CPU and ingest it in the metadata or third-party storage.

When running the operations from the list, consider using CUDA streams to avoid synchronous blocking operations when possible.

To sum up, PyTorch wins from using Savant by accessing raw video frames most efficiently – in the GPU RAM without downloading them to the CPU RAM. The OpenCV-based reading or GPU-accelerated decoding implemented in the Torchaudio extension does not provide comparable performance and resource utilization.

Savant is great at delivering ready-to-use pipelines fast: it provides all required infrastructure to access data sources and sinks, transfer video data through networks, scale processing, and instrument distributed pipelines with OpenTelemetry and Prometheus. So, even if you do not want to use Nvinfer, your application can benefit if you deploy your PyTorch inference within Savant infrastructure.

What is The Role of PyTorch Inference Integration for Savant?

PyTorch is one of the most known DeepLearning solutions in the world, alongside PyTorch and Keras. Its functionality is way broader than that provided by TensorRT and NVInfer. With PyTorch, you can infer and train models and use advanced algorithms implemented for data processing. Also, it does not focus on computer vision but can serve models to predict data in other modalities; thus, you can use it to handle auxiliary data delivered with video data in attributes.

From the computer vision perspective, some models may utilize features not supported by TensorRT yet. PyTorch can help you to deploy these models in the Savant pipeline efficiently.

PyTorchHub is an excellent source for ready-to-use models. When you need to demonstrate the end-to-end solution quickly but do not need the peak performance, you can use the PyTorchHub and Savant infrastructure components models to build a demo in hours, not days.

Asynchronous PyTorch Model Serving

You probably have seen articles describing how to serve the PyTorch model with FastAPI. Well, what you read in the section will demonstrate a better way to do that with Savant.

Savant is great not only for video processing but also for image processing. We provide ClientSDK: a toolkit for sending images and retrieving results from the pipeline in a completely asynchronous way. With ClientSDK, you can implement picture processing really efficiently.

If you previously programmed model-serving API, you know the problems happening with naive synchronous approaches:

- When the traffic bursts, you encounter unpredicted delays in serving; thus, clients may wait for server responses for many seconds, leading to premature connection termination and server resource waste.

- Multiple serving server instances evaluated in parallel are inefficient because model inference usually consumes all the resources available; thus, parallelization only increases the processing time for every request.

You must reserve many resources upfront or implement asynchronous processing or polling to overcome the limitations. Savant has its way of implementing such processing: ClientSDK provides a source and sink – two iterator-like classes, allowing the developer to ingest data and retrieve results entirely asynchronously. When the sink reads data, it can use the OTLP trace ID installed by the source to associate responses with requests. The external, client-facing interface can be arbitrary, let us say, implemented with FastAPI.

Savant supports streaming out of the box; therefore, all images ingested with the same source ID are processed within the same session, allowing the implementation of a processing state.

PyTorch Sample

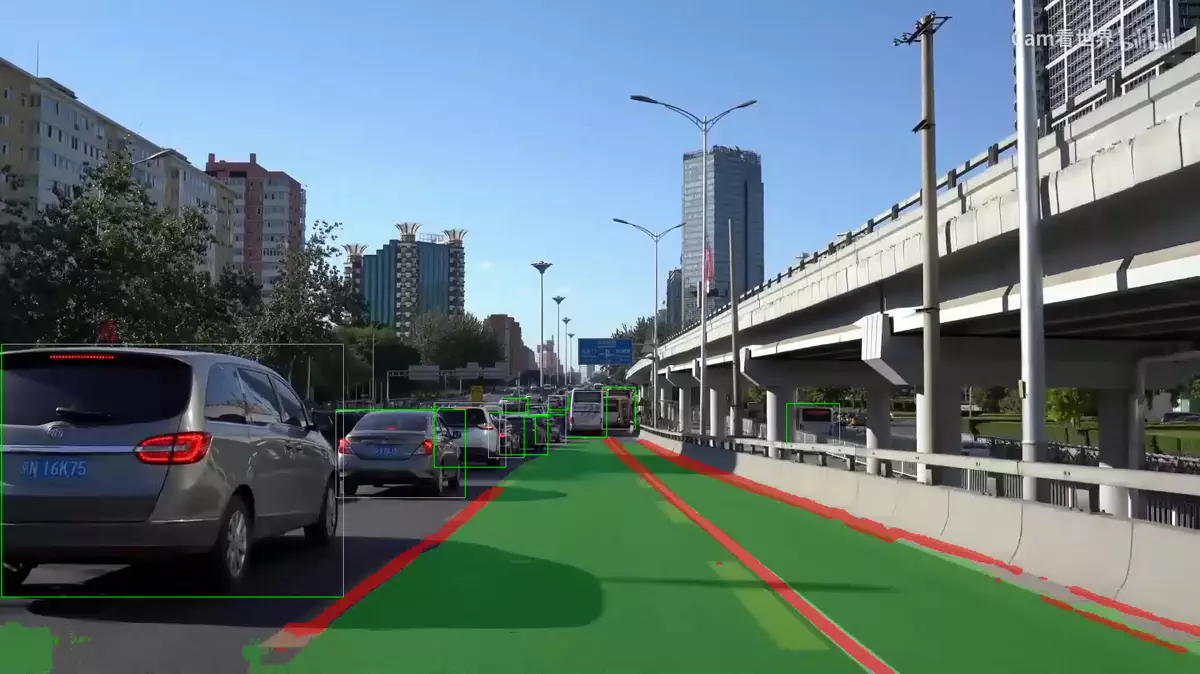

We prepared a sample demonstrating the use of PyTorch in the Savant pipeline. The sample uses a prebuilt model from TorchHub related to autonomous driving (hustvl/yolop). It supports real-time serving 2-3 cams on a state-of-the-art Nvidia GPU.

The sample source code is located in the Savant repository by the link.

Don’t forget to subscribe to our X to receive updates on Savant. Also, we have Discord, where we help users.

To know more about Savant, read our article: Ten reasons to consider Savant for your computer vision project.