Media Pass-Through copies the incoming video stream to the output without transcoding. The feature is beneficial when the pipeline does not use draw functionality or when drawing is handled by a separate pipeline in a chain.

In this mode, the incoming video frames are copied directly to the output with modified metadata.

Feature Idea

Nvidia has recently started producing specialized hardware without NVENC. Such devices cannot encode GPU-allocated frames efficiently or cheaply. For example, the most affordable edge device, the Nvidia Jetson Orin Nano, comes without NVENC, and the most powerful data center accelerators, the V100 and A100, do not have NVENC on board either.

Passing raw frames between GPU and CPU for encoding is inefficient: it easily saturates PCI-E and causes a significant performance drop. CPU-based encoding is also not a default choice for Savant users. Moreover, many users do not require drawing functionality, or they implement it separately in an auxiliary pipeline chained with the video analytics. Having identified these cases, we decided to implement the Video Pass-Through mode of operation.
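To give a rough sense of scale for the raw-frame traffic mentioned above, here is a minimal back-of-the-envelope sketch. The resolution, pixel format (RGBA, 4 bytes per pixel), frame rate, and the 4 Mbit/s encoded bitrate are illustrative assumptions, not figures measured in Savant.

```python
def raw_stream_bandwidth(width: int, height: int, bytes_per_pixel: int, fps: float) -> float:
    """Return the bytes per second needed to move uncompressed frames."""
    return width * height * bytes_per_pixel * fps


# Assumed example: one 1080p RGBA stream at 30 FPS.
raw_bps = raw_stream_bandwidth(1920, 1080, 4, 30)

# Assumed bitrate of a comparable encoded stream: 4 Mbit/s.
encoded_bps = 4_000_000 / 8

print(f"raw:     {raw_bps / 1e6:.1f} MB/s per stream")
print(f"encoded: {encoded_bps / 1e6:.1f} MB/s per stream")
print(f"ratio:   about {raw_bps / encoded_bps:.0f}x")
```

Under these assumptions a single raw stream needs hundreds of megabytes per second, so a multi-stream pipeline multiplies that load accordingly, while the already-encoded input stays orders of magnitude smaller.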

Devices Without NVENC

The following hardware comes with no NVENC on board:

- Nvidia Jetson Orin Nano;

- Nvidia Tesla V100, A30, A100, H100.

The full and up-to-date list of hardware can be found in the link.

How To Configure Video Pass-Through

To configure pass-through mode, set parameters.output_frame.codec to copy:

    parameters:
      output_frame:
        codec: copy

You still need to configure the internal processing resolution to cast all streams to a common size:

    parameters:
      frame:
        width: 1280
        height: 720

You can use auxiliary padding for internal purposes; however, at the end of the pipeline, the coordinates for all objects will be transformed automatically back to the original resolution on a per-stream basis.
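The coordinate transformation described above can be illustrated with a small sketch. This is not Savant's implementation: the `BBox` type, the `to_source_coords` function, and the padding convention (left and top offsets in processing-frame pixels) are hypothetical names and assumptions made up for the example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class BBox:
    """Axis-aligned box: top-left corner plus size, in pixels (hypothetical type)."""
    left: float
    top: float
    width: float
    height: float


def to_source_coords(
    box: BBox,
    frame_size: Tuple[int, int],
    source_size: Tuple[int, int],
    padding: Tuple[int, int] = (0, 0),
) -> BBox:
    """Map a box from the processing frame back to the source resolution.

    frame_size  -- (width, height) of the processing frame without padding
    source_size -- (width, height) of the original stream
    padding     -- assumed (left, top) padding added around the processing frame
    """
    frame_w, frame_h = frame_size
    src_w, src_h = source_size
    pad_left, pad_top = padding
    scale_x = src_w / frame_w
    scale_y = src_h / frame_h
    # Remove the padding offset first, then rescale to the source resolution.
    return BBox(
        left=(box.left - pad_left) * scale_x,
        top=(box.top - pad_top) * scale_y,
        width=box.width * scale_x,
        height=box.height * scale_y,
    )


# A box detected on the 1280x720 processing frame, mapped to a 1920x1080 source.
detected = BBox(left=640, top=360, width=128, height=72)
print(to_source_coords(detected, (1280, 720), (1920, 1080)))
```

Because each stream may have its own original resolution, a mapping like this has to be applied per stream, with `source_size` taken from that stream's metadata.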

Usage Example

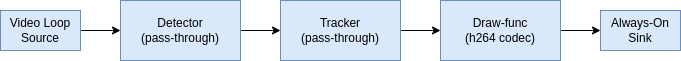

We created an example demonstrating this feature in the context of pipeline chaining – a technique allowing you to create distributed pipelines utilizing multiple hardware (for example, a hybrid edge/core processing). The sample is portrayed in the following image:

As you can see, there are 5 separate docker images, the inner three represent the AI pipeline, and the two of them are configured to pass through the video.

Investigate the sample in the Savant repository.
