Cameras play the most important role in computer vision projects. The quality of the captured picture often matters far more than the sophistication of the computer vision models used in the solution. The truth is that without a proper camera, implementing a state-of-the-art solution that meets business needs is often impossible. For example, facial recognition and optical character recognition applications require high-quality, expensive cameras that deliver pixel-perfect images to the system. Computer vision engineers and product sponsors/owners often do not understand camera characteristics and end up in situations where the solution cannot work in the wild at all.

Every camera has many properties that define its capabilities. Some properties are determined by the camera software, while others are wired into the hardware. In this article, I will discuss three major properties of any camera that heavily influence its suitability for a particular solution, along with frequent misconceptions that come up in discussions with developers and business users.

Let us split all the computer vision tasks into two groups:

- Requiring a large field of view (FOV) to solve the problem.

- Requiring a high pixel density to solve the problem.

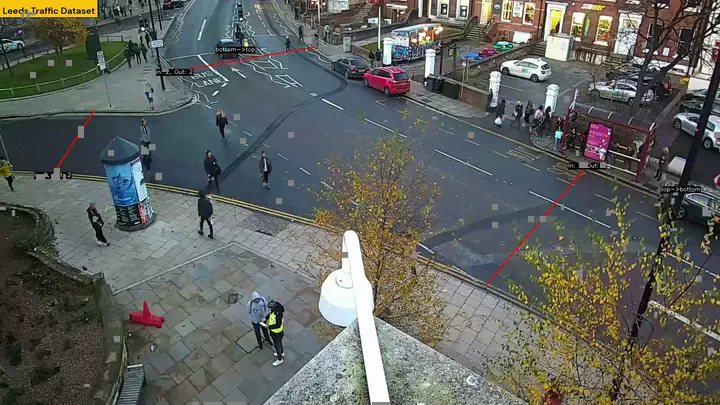

An example of the first class is a traffic monitoring system depicted in the following image:

Such a system requires a large FOV to cover the entire area in a simplified way. Sometimes, when a single camera cannot cover the whole area, a multi-cam approach can be used.

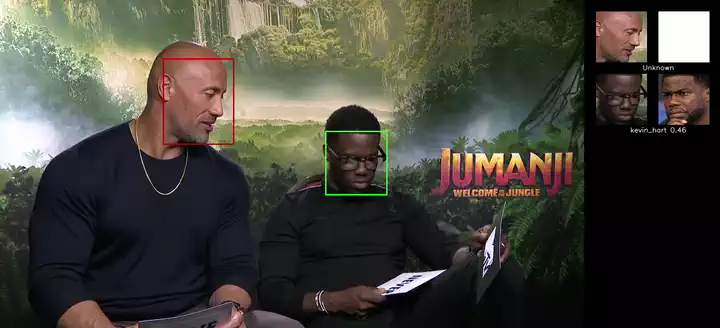

An example of the second class is a facial recognition system depicted in the following image:

Such a system does not need to see a broad space, but it requires a very clear picture in its FOV and high pixel density to ensure that a crop is still of high fidelity.

Of course, the most complex projects sit at the intersection of the two classes, requiring both a large FOV and a high pixel density.

In my experience, novice computer vision engineers and product owners do not distinguish between these two cases and rely on intuition that misleads them. They assume that buying a high-resolution camera, say 8 MP (4K), is a silver bullet for any project. It is not.
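The gap between resolution and pixel density is easy to quantify. Below is a minimal sketch, assuming an ideal pinhole camera with no lens distortion, that computes horizontal pixel density in pixels per meter of scene width at a given distance. The function name and the two setups (a 4K camera with a 90° horizontal FOV and a Full HD camera with a 10° horizontal FOV, both 20 m from the scene) are hypothetical and chosen only for illustration.

```python
import math


def pixels_per_meter(h_pixels: int, hfov_deg: float, distance_m: float) -> float:
    """Horizontal pixel density at a given distance, assuming an ideal
    pinhole camera (no lens distortion)."""
    # Width of the scene covered by the horizontal FOV at this distance.
    scene_width_m = 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)
    return h_pixels / scene_width_m


# Hypothetical setups: 4K with a wide lens vs. Full HD with a narrow lens.
wide_4k = pixels_per_meter(3840, hfov_deg=90.0, distance_m=20.0)
narrow_fhd = pixels_per_meter(1920, hfov_deg=10.0, distance_m=20.0)
print(f"4K, 90 deg HFOV at 20 m: {wide_4k:.0f} px/m")
print(f"Full HD, 10 deg HFOV at 20 m: {narrow_fhd:.0f} px/m")
```

Under these assumptions, the 4K camera yields about 96 px/m, while the Full HD camera yields about 549 px/m, so the lower-resolution camera resolves roughly 5.7 times finer detail at that distance.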

Let us decompose any camera into four major components:

- sensor;

- lens;

- chip;

- software.

Each of these components has a huge impact on picture quality. Let's look in more detail at what each one is responsible for and how it influences the result.

Sensor

The sensor converts photons into electrical signals and passes them to the chip, which transforms them into image data delivered over a data protocol. Sensors are rocket-science-class devices that have been evolving for many decades. Every sensor has a large number of characteristics that define its properties and cost. The most critical ones include:

Resolution (Megapixels). The number of pixels the sensor can capture. Higher resolution means more detail in the image, allowing for larger prints or more room for cropping without losing quality.

Sensor Size. The physical dimensions of the sensor (e.g., Full Frame, APS-C, Micro Four Thirds). Larger sensors generally capture more light and provide better image quality, with improved dynamic range and lower noise in low-light conditions.

Dynamic Range. The range between the darkest and brightest parts of an image that the sensor can capture without losing detail. A high dynamic range allows for better handling of scenes with a wide range of lighting, preserving detail in both shadows and highlights.

Pixel Size. The size of individual pixels on the sensor. Larger pixels can capture more light, improving performance in low-light conditions and reducing noise.

Sensitivity (ISO Performance). The sensor’s ability to capture images in different lighting conditions. A sensor with good high ISO performance can take better pictures in low light without excessive noise.

Color Depth. The range of colors that the sensor can capture. Higher color depth allows for more accurate color reproduction and smoother color transitions.

Signal-to-Noise Ratio (SNR). The measure of signal (image data) relative to noise in the image. A higher SNR results in clearer images with less noise, particularly in low-light conditions.

Frame Rate and Readout Speed. How quickly the sensor can capture consecutive frames. Important for video and fast-action photography; higher frame rates allow for smoother video and better capture of fast-moving subjects.

Shutter Type (Global vs. Rolling Shutter). The way the sensor reads data (all at once or sequentially). Global shutters can avoid distortions like the “rolling shutter” effect seen in fast-moving scenes.

Low-Light Performance. The sensor’s ability to capture usable images in poor lighting conditions. Sensors with strong low-light performance can produce less noisy images with better detail and color accuracy in dim environments.

Lens Compatibility. The sensor’s ability to work effectively with different types of lenses. Some sensors are optimized for certain lens mounts or have crop factors that influence effective focal length.

Sensor Technology (e.g., CMOS, CCD, BSI). The type of sensor technology used. Technologies like BSI (backside-illuminated sensors) offer better light capture and can improve performance in low light compared to traditional sensors.
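Several of these characteristics can be estimated from a few datasheet values. The sketch below uses common textbook approximations: dynamic range as the ratio of full-well capacity to read noise, and a simplified SNR that accounts only for photon shot noise and read noise (dark current and fixed-pattern noise are ignored). All sensor numbers are hypothetical; the larger pixel is assumed to collect four times as many electrons under the same dim light because its area is four times larger.

```python
import math


def dynamic_range_db(full_well_e: float, read_noise_e: float) -> float:
    """Dynamic range in dB: largest signal (full-well capacity, electrons)
    over the noise floor (read noise, electrons)."""
    return 20.0 * math.log10(full_well_e / read_noise_e)


def snr_db(signal_e: float, read_noise_e: float) -> float:
    """Simplified SNR in dB: shot noise (sqrt of the signal) and read noise
    are added in quadrature; other noise sources are ignored."""
    noise_e = math.sqrt(signal_e + read_noise_e ** 2)
    return 20.0 * math.log10(signal_e / noise_e)


# Hypothetical sensors with the same read noise but different pixel sizes.
small_pixel = {"full_well_e": 6000.0, "read_noise_e": 3.0, "dim_signal_e": 25.0}
large_pixel = {"full_well_e": 24000.0, "read_noise_e": 3.0, "dim_signal_e": 100.0}

for name, s in (("small pixel", small_pixel), ("large pixel", large_pixel)):
    dr = dynamic_range_db(s["full_well_e"], s["read_noise_e"])
    snr = snr_db(s["dim_signal_e"], s["read_noise_e"])
    print(f"{name}: dynamic range {dr:.1f} dB, SNR in dim light {snr:.1f} dB")
```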

Every camera is made for a purpose: cheap CCTV cameras have cheap sensors, while industrial cameras have expensive, top-notch sensors and cost a lot. The sensor is the heart of a camera; if it cannot capture images of sufficient quality, you will fail no matter what else you try.

Every characteristic listed above can play a decisive role in a particular task.

Here we encounter the first mistake that developers and product owners make: they look only at megapixels because the other properties mean nothing to them. They assume that the more megapixels a camera has, the better it is for any task.

This is a misconception: there are industrial cameras with 720p and even lower resolutions that capture crystal-clear images of fast-moving objects at 120 FPS in low-light conditions, and there are 8K cameras that fail to produce a good enough picture of a fast-moving object even in the best possible, brightly lit scene.
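A back-of-the-envelope calculation shows why resolution alone does not help with fast motion. The sketch below estimates motion blur (how far an object moves during the exposure) and rolling-shutter skew (how far it moves while the sensor reads out its rows from top to bottom), both in pixels. The speed, exposure times, readout time, and pixel density are hypothetical values. Note that a higher pixel density makes both effects larger in pixel terms, not smaller.

```python
def motion_blur_px(speed_m_s: float, exposure_s: float, px_per_m: float) -> float:
    """Distance the object travels while the shutter is open, in pixels."""
    return speed_m_s * exposure_s * px_per_m


def rolling_shutter_skew_px(speed_m_s: float, readout_s: float, px_per_m: float) -> float:
    """Horizontal shift between the first and the last sensor row for a rolling
    shutter that needs `readout_s` seconds to read the whole frame.
    A global shutter exposes all rows simultaneously, i.e. readout_s = 0."""
    return speed_m_s * readout_s * px_per_m


speed = 20.0     # hypothetical vehicle speed: 20 m/s (72 km/h)
density = 300.0  # hypothetical pixel density on the object plane, px/m

for exposure in (1 / 250, 1 / 2000):
    blur = motion_blur_px(speed, exposure, density)
    print(f"exposure 1/{round(1 / exposure)} s: blur {blur:.1f} px")
print(f"rolling shutter, 20 ms readout: skew {rolling_shutter_skew_px(speed, 0.020, density):.1f} px")
print(f"global shutter: skew {rolling_shutter_skew_px(speed, 0.0, density):.1f} px")
```

A 1/2000 s exposure keeps blur to about 3 px here, but it collects eight times less light than 1/250 s, which is why sensitivity and low-light performance matter as much as resolution for fast objects.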

Summary: sensor resolution is only one of more than a dozen characteristics influencing a picture’s quality.
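One reason more megapixels are not automatically better: on a sensor of the same physical size, more pixels means smaller pixels. The sketch below estimates pixel pitch from the active sensor width and horizontal resolution, assuming square pixels spread evenly across the width, and compares the light gathered per pixel by area (ignoring microlens and fill-factor differences). The 5.6 mm width is a hypothetical value for a small CCTV-class sensor.

```python
def pixel_pitch_um(sensor_width_mm: float, h_pixels: int) -> float:
    """Approximate pixel pitch in micrometers (square pixels, no gaps assumed)."""
    return sensor_width_mm * 1000.0 / h_pixels


def light_per_pixel_ratio(pitch_a_um: float, pitch_b_um: float) -> float:
    """How much more light a pixel of pitch A collects than a pixel of pitch B,
    assuming collected light scales with pixel area."""
    return (pitch_a_um / pitch_b_um) ** 2


width_mm = 5.6  # hypothetical active sensor width
fhd = pixel_pitch_um(width_mm, 1920)
uhd = pixel_pitch_um(width_mm, 3840)
print(f"Full HD pitch: {fhd:.2f} um, 4K pitch: {uhd:.2f} um")
print(f"Each Full HD pixel collects ~{light_per_pixel_ratio(fhd, uhd):.1f}x more light")
```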

Lens

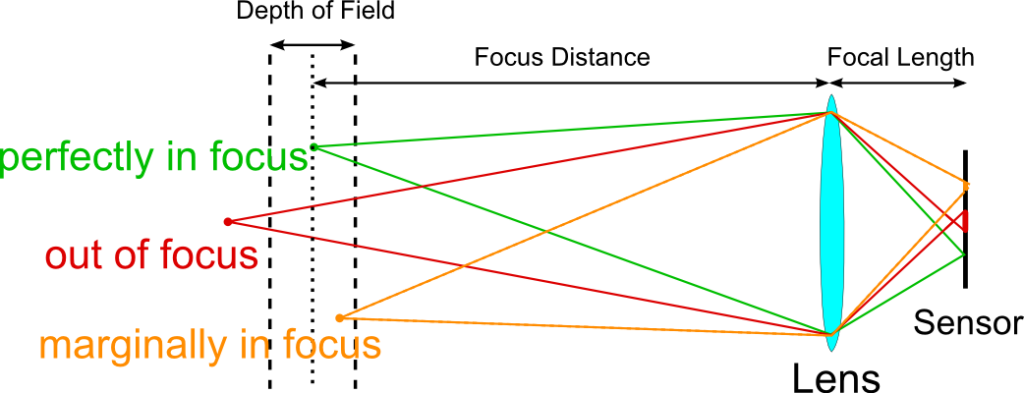

The sensor without a lens is worthless. Lenses map the outer space to a sensor crystal:

Without a lens, a sensor receives chaotic light and produces no good. The quality and properties of an objective (a device implementing a particular lens system) define what FOV is mapped to a sensor, how much light passes, which waves are filtered (e.g., IR filter), and which pass.
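For an ideal rectilinear (distortion-free) lens, the horizontal FOV follows directly from the focal length f and the sensor width w: HFOV = 2·atan(w / 2f). The sketch below applies that pinhole approximation; the 5.6 mm sensor width and the focal lengths are hypothetical, and real lenses, especially wide-angle ones, deviate from this model.

```python
import math


def horizontal_fov_deg(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Horizontal field of view of an ideal pinhole/rectilinear lens, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


sensor_width_mm = 5.6  # hypothetical sensor width
for focal_mm in (2.8, 8.0, 50.0):
    hfov = horizontal_fov_deg(sensor_width_mm, focal_mm)
    print(f"f = {focal_mm:>4} mm -> HFOV = {hfov:.1f} deg")
```

The same sensor covers a 90° view with a 2.8 mm lens but only about 6° with a 50 mm lens, which is exactly the trade-off between the two task classes.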

Objectives are very complex and high-precision optical devices that can have:

- fixed focus;

- variable focus (manual or motorized).

High-quality objectives can easily cost several thousand USD. By the amount of distortion they introduce, lenses can be grouped into several classes:

- narrow-angle low distortion lenses;

- wide-angle medium distortion lenses;

- ultra-wide-angle high-distortion lenses (fish-eye).
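Distortion is commonly described with a radial polynomial in normalized image coordinates, such as the radial part of the Brown-Conrady model used by many calibration tools: `x_d = x * (1 + k1 * r^2 + k2 * r^4)`. The sketch below applies only that radial term with hypothetical coefficients; a negative `k1` produces barrel distortion, which pulls points toward the center more strongly the farther they are from it. Strong fish-eye lenses generally need a dedicated projection model instead (OpenCV, for example, provides a separate `cv2.fisheye` module for them).

```python
def radial_distort(x: float, y: float, k1: float, k2: float = 0.0) -> tuple[float, float]:
    """Apply the radial part of the Brown-Conrady model to a point given in
    normalized coordinates (origin at the optical center, focal length = 1)."""
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


# Hypothetical coefficients: none, mild barrel, strong barrel distortion.
for k1 in (0.0, -0.05, -0.3):
    xd, _ = radial_distort(0.8, 0.0, k1)
    print(f"k1={k1:+.2f}: point at x=0.800 maps to x={xd:.3f}")
```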

Depending on the task class, you may need a particular lens.

So, with the right lens, even a 640×480 sensor can capture a face at a distance of 100 meters, while with the wrong lens a 4K sensor may fail to do so at 3 meters.

Summary: a lens defines how far and how wide a camera can see. With an improper lens, it does not matter how many megapixels the sensor has; you will not get a high-quality picture. With the right lens, even a low-resolution sensor can solve the task.
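The claim above follows from the pinhole model: the number of pixels an object spans is roughly f · S / (d · p), where f is the focal length, S the object size, d the distance, and p the pixel pitch. The sketch below inverts that relation to find the focal length needed to put a target number of pixels across a face. The 0.16 m face width, the 80-pixel target, the pixel pitches, and the 2.8 mm lens are hypothetical values for illustration, not recognition requirements.

```python
def pixels_on_target(focal_mm: float, pitch_um: float, distance_m: float, size_m: float) -> float:
    """Pixels spanned by an object of size `size_m` at `distance_m` (pinhole model)."""
    return (focal_mm * 1e-3) * size_m / (distance_m * pitch_um * 1e-6)


def required_focal_mm(target_px: float, pitch_um: float, distance_m: float, size_m: float) -> float:
    """Focal length (mm) needed for the object to span `target_px` pixels."""
    return target_px * (pitch_um * 1e-6) * distance_m / size_m * 1e3


FACE_WIDTH_M = 0.16  # hypothetical face width
TARGET_PX = 80       # hypothetical pixels needed across the face

# Hypothetical 640x480 sensor with 5.6 um pixels, face at 100 m.
f_vga = required_focal_mm(TARGET_PX, 5.6, 100.0, FACE_WIDTH_M)
print(f"640x480 @ 100 m needs f = {f_vga:.0f} mm")

# Hypothetical 4K sensor with 1.45 um pixels behind a wide 2.8 mm lens.
for d in (3.0, 10.0):
    px = pixels_on_target(2.8, 1.45, d, FACE_WIDTH_M)
    print(f"4K, 2.8 mm lens @ {d:.0f} m: {px:.0f} px across the face")
```

In this illustration, the VGA sensor behind a 280 mm lens reaches the 80-pixel target at 100 m, while the 4K sensor with a 2.8 mm lens drops below the same target at just under 4 m.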

Chip

A camera chip transforms electrical signals from the sensor into beautiful RGB and YUV images. To do that, the chip can integrate a set of DSPs with suitable IP cores or perform the processing in software. The following YouTube video (technically complex) features a discussion of how such processing is implemented in Raspicam (Raspberry Pi):

The quality of these DSPs or software algorithms, together with a chip design that ensures stable operation in different environmental conditions, defines the quality of the RGB/YUV pictures sent to the host system via application protocols.
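To give a flavor of this processing, here is a deliberately simplified sketch of two steps: turning an RGGB Bayer mosaic into RGB by collapsing each 2×2 cell into one pixel (half-resolution binning rather than full interpolation), and converting RGB to analog-style YUV with the ITU-R BT.601 luma weights. It assumes an RGGB pattern and omits black-level correction, white balance, denoising, and gamma; production ISPs do considerably more. The function names and the sample mosaic are hypothetical.

```python
def demosaic_rggb_binned(raw: list[list[float]]) -> list[list[tuple[float, float, float]]]:
    """Collapse each 2x2 RGGB cell (R G / G B) into one RGB pixel,
    producing an image at half the resolution in each dimension."""
    height, width = len(raw), len(raw[0])
    if height % 2 or width % 2:
        raise ValueError("expected even mosaic dimensions")
    rgb = []
    for y in range(0, height, 2):
        row = []
        for x in range(0, width, 2):
            r = raw[y][x]
            g = (raw[y][x + 1] + raw[y + 1][x]) / 2  # average the two green samples
            b = raw[y + 1][x + 1]
            row.append((r, g, b))
        rgb.append(row)
    return rgb


def rgb_to_yuv_bt601(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Analog YUV from RGB using BT.601 luma weights."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = 0.492 * (b - y)
    v = 0.877 * (r - y)
    return y, u, v


# Hypothetical 2x4 RGGB mosaic: one row of two 2x2 cells.
mosaic = [
    [200, 120, 190, 110],
    [118, 60, 112, 58],
]
for pixel in demosaic_rggb_binned(mosaic)[0]:
    print(pixel, "->", tuple(round(c, 1) for c in rgb_to_yuv_bt601(*pixel)))
```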

Software

Uncompressed images occupy a lot of space: a Full HD frame in 8-bit RGB occupies almost 6 MiB. Streaming such frames to a host at 30 FPS requires about 178 MiB/s of bandwidth; for 4K it is 711 MiB/s, and for 8K an enormous 2,847 MiB/s. To cope with such demand, cameras often transfer video streams in a lossy compressed format: MJPEG, H.264, or HEVC. Such encoding requires a dedicated ASIC or a software implementation. Depending on the ASIC IP core or on the software and the performance of the onboard CPU, this step can increase latency, reduce quality, and even introduce visual artifacts. Sometimes the CPU cannot encode video with a high-quality profile at all, and the decoded pictures end up grainy or corrupted.
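The bandwidth figures are straightforward to reproduce. The sketch below assumes 3 bytes per pixel (8-bit RGB) and expresses the result in mebibytes per second, which is the unit the figures in the text match (they are truncated to whole numbers). For comparison, it also computes the raw payload capacity of a 1 Gbit/s link, ignoring protocol overhead.

```python
MIB = 1024 * 1024  # bytes in a mebibyte


def raw_bandwidth_mib_s(width: int, height: int, fps: float, bytes_per_pixel: int = 3) -> float:
    """Bandwidth of an uncompressed stream; 3 bytes per pixel = 8-bit RGB."""
    return width * height * bytes_per_pixel * fps / MIB


resolutions = {"Full HD": (1920, 1080), "4K UHD": (3840, 2160), "8K UHD": (7680, 4320)}
for name, (w, h) in resolutions.items():
    print(f"{name} @ 30 FPS: {raw_bandwidth_mib_s(w, h, 30):.1f} MiB/s")

# Raw payload capacity of a 1 Gbit/s link, ignoring protocol overhead.
gige_mib_s = 1e9 / 8 / MIB
print(f"1 Gbit/s link: {gige_mib_s:.1f} MiB/s")
```

Even uncompressed Full HD at 30 FPS exceeds what a 1 Gbit/s link can carry, which is why the compression described above is so widespread.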

Trusted vendors such as Axis, HikVision, or Dahua ensure quality across a broad range of modes, while unknown white-label vendors may install weak CPUs and ship poor-quality algorithms built on mediocre technology that did not require significant investment in innovation.

Final Thoughts

There is no such thing as a camera that fits all tasks. Cameras that are already installed often cannot solve a new task because they were chosen for a different purpose.

When possible, choose specialized medium-resolution cameras with high-quality global-shutter sensors and high-quality lenses over high-resolution CCTV cameras.

If you need the best possible quality, consider the following options, from most to least preferred:

- GigE Vision industrial cameras;

- High-quality USB/CSI cameras with properly chosen lenses;

- Commodity CCTV cameras.

Why Lower Resolution?

Lower resolution requires fewer computing resources on both the camera and the host. As a result, the software runs faster because memory management and data transfers (e.g., host-to-GPU copies with CUDA) are cheaper. Hardware-assisted encoders and decoders also use fewer resources, and image scalers in front of AI inference units work faster and do not become a bottleneck.
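A quick calculation illustrates the point: when frames are downscaled to a small model input anyway, most captured pixels are moved, copied, and resized only to be discarded. The sketch assumes a hypothetical 640×640 detector input and 8-bit RGB frames at 30 FPS; it reports how many megabytes per second the scaler must read and how large the model input is relative to the captured frame.

```python
MODEL_INPUT = (640, 640)  # hypothetical detector input size


def scaler_load(width: int, height: int, fps: float = 30.0, bytes_per_pixel: int = 3) -> tuple[float, float]:
    """Return (megabytes read per second by the scaler,
    ratio of model-input pixels to captured pixels)."""
    bytes_per_s = width * height * bytes_per_pixel * fps
    kept = (MODEL_INPUT[0] * MODEL_INPUT[1]) / (width * height)
    return bytes_per_s / 1e6, kept


for name, (w, h) in {"Full HD": (1920, 1080), "4K UHD": (3840, 2160)}.items():
    mb_s, kept = scaler_load(w, h)
    print(f"{name}: scaler reads {mb_s:.0f} MB/s, model input has {kept:.1%} as many pixels")
```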

Motorized Objectives Rule Them All

In tasks where you need a large FOV in one operational mode and high precision and quality in another, consider motorized objectives that let you switch between camera properties dynamically.